Facebook’s policies for moderating or deleting hate speech were exposed Wednesday in an in-depth investigation by ProPublica. The article begins by laying out a scenario that is common on Facebook.

Earlier this month, U.S. Congressman Clay Higgins wrote a Facebook post in which he called for the murder of “radicalized” Muslims, saying that they should all be identified and killed. His post was never deleted, even though Facebook workers scour the social network to remove offensive speech.

In May, however, Boston poet and Black Lives Matter activist Didi Delgado wrote a post claiming that “all white people are racist.” Facebook removed that post and disabled the account for seven days as a result. The investigation looks at how and why some people’s posts are protected on the social network while others’ are not.

Facebook’s policies protect ‘white men’ from ‘black children’ and ‘female drivers’

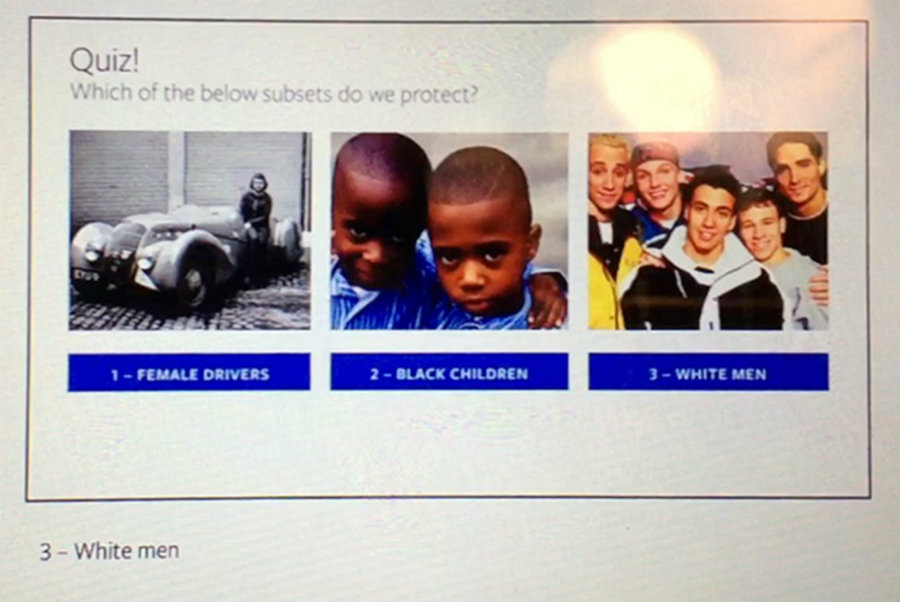

One of the most unsettling parts of how Facebook moderators are trained is illustrated in a quiz slide that the social network uses to train workers. The slide asks, “Which of the below subsets do we protect?” The options are female drivers, black children, and white men (this answer shows a picture of the Backstreet Boys). The correct answer to this question, according to Facebook policies, is white men.

The policies, according to ProPublica, allow moderators to delete hate speech against white men because they fall under a “protected category” while the other two picks in the quiz fall under a “subset category,” which means that attacks are allowed against them.

Facebook’s algorithm is designed to defend all genders and races equally. Critics argue that this approach misses the point: the rules are “incorporating this color-blindness idea which is not in the spirit of why we have equal protection,” said Danielle Citron, a law professor at the University of Maryland. Facebook, for its part, says its goal is to apply consistent standards worldwide.

“The policies do not always lead to perfect outcomes,” said Monika Bickert, head of global policy management at Facebook, according to ProPublica. “That is the reality of having policies that apply to a global community where people around the world are going to have very different ideas about what is OK to share.”

Facebook recently pledged to increase its army of censors from 4,500 to 7,500 after a media backlash over a video of a murder posted on the site. While it may be the most far-reaching global censorship operation in history, there’s a catch: the company does not disclose the rules it uses to determine what content is allowed and what needs to be deleted. On this subject, Bickert noted that Facebook does not get it right every time.

The company admits it has taken down posts by mistake

Facebook also posted a long explanation of how it locates and removes hate speech on the social network, and why it might sometimes fail to do so. The post details the difficulties of defining hate speech in such a large community, how Facebook teaches AI to handle its nuances, and how it separates intentional hate speech from posts that describe hate speech in order to critique it.

In the statement, called “Hard Questions: Hate Speech,” the company describes scenarios that could alert automated tools, such as insulting terms that communities have flagged, and it also explains that there have been cases in which Facebook takes down a post by mistake.

For example, last year Shaun King, an African-American activist, posted hate mail he had received that included foul slurs. Facebook took the post down by mistake because it did not recognize at first that the mail had been shared to condemn the attack.

“When we were alerted to the mistake, we restored the post and apologized,” said Richard Allan, Facebook’s VP of public policy, in the statement. “Still, we know that these kinds of mistakes are deeply upsetting for the people involved and cut against the grain of everything we are trying to achieve at Facebook.”

Facebook relies heavily on users to flag hate speech

The company, founded by Mark Zuckerberg, stressed its continued commitment to improving on matters related to hate speech. Allan explains that technology can’t identify and solve these problems all the time, so the company also relies on the community to detect and report potential hate speech.

“Managing a global community in this manner has never been done before, and we know we have a lot more work to do,” reads Facebook’s statement. “We are committed to improving – not just when it comes to individual posts, but how we approach discussing and explaining our choices and policies entirely.”

The company needs to come up with a method that scales to its nearly 2 billion users. Facebook has reported deleting around 66,000 posts reported as hate speech every week.

Source: ProPublica